Welcome back beautiful people.


Thanks for giving me a shot to write to you about art. Feels good!

I am just laying down the groundwork to be more tactical in the future. Right now, we want to establish the foundation for what we admire. A foundation of admiration.

Last time, we talked about the aesthetics of deterministic art systems. Things that people code top-down.

But one of the other beautiful things going on, one of the most alien and strange and bizarre, but also deeply moving and existentially compelling, is artificial intelligence generating art. I really like the term “neural art”, with an etymology in the concept of “neural network”. Other people enjoy talking about DeepDream, and DeepStyle, and GANs, and Stable Diffusion, and generative AI, and so on.

I am not going to get the actual history right, but that doesn’t mean I can’t share my *personal* history with neural art. It started for me in seeing the original DeepDream piece from Google, when Alex Mordvintsev turned a machine vision algorithm inside out, creating the first machine learning hallucination.

This had a profound impact on people like me, interested in high tech applied to high art, but also on the very definition of what machine learning can be used to do. You might be used to the above image, familiar with it. But if you’ve ever taken a psychedelic, you might be alarmed to see how similar a machine deconstruction of our brains is to the experience of deconstructing your visual experience chemically.

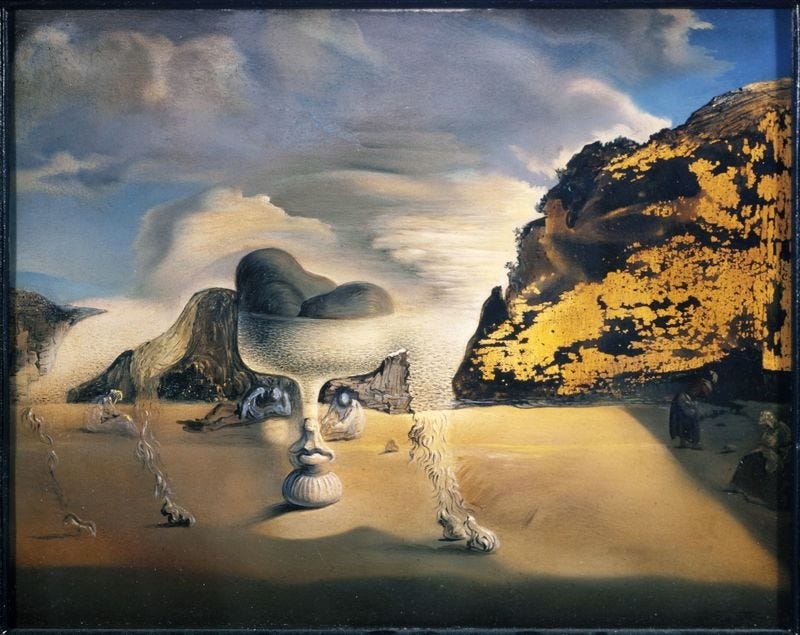

It’s uncanny. Look at Dali’s surrealism as a precursor to these expressions, projecting forward layers of imagery of the impossible. Or at psychedelic art from the 1970s, pulsating with layers of suggestion. Tell me you don’t see it in the robot’s dreams.

Or the godfather of psychedelic art, Alex Grey.

Clearly there is some connection between how image recognition algorithms break down when you hand-crank their parameters, and the way our own mind generates concepts, images, and meaning. That’s the biggest unlock of DeepDream.

The next breakthrough, DeepStyle, moved away from projecting noses of dog-cats and cow-slugs all over photos, and instead into applying the styles of different artists to your images. A bunch of applications helped people take neural network layers associated with a particular visual artist, and use that as a style to format your image. The outcomes were often janky, because the underlying image structure didn’t change. But even the very early versions of this were amazing. I covered it in a piece that’s the inspiration for this very newsletter, called Human Art is Dead.

Every artist, ever, could be in your phone, ready to be deployed on any piece of visual content.

Of course now, we can do far, far more than that.

Models all the way Down

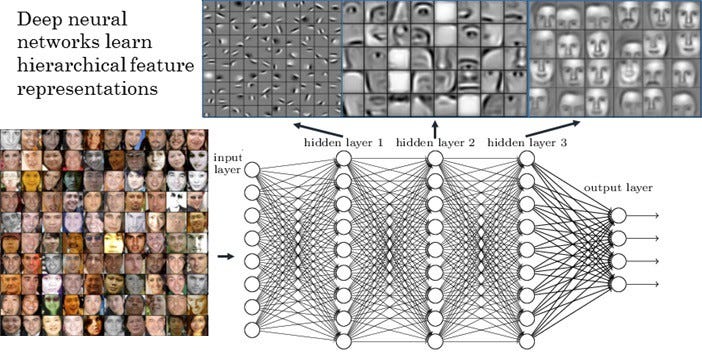

The structure of the image recognition neural network is something like this —

A giant data set of images is sliced up into features and then those features are tuned at different layers. This isn’t an AI tech blog, so you’ll have to live with my description.

But there are other architectures for neural networks, like GANs, short for generative adversarial networks.

The cartoon explanation for this is that you’ve got a generator of images, and then an adversarial editor of images. Something hallucinates images, and something else selects them for fidelity. We get a nice concept from this model that wasn’t coming through the Deep approaches, and that’s the concept of latent space.

This nightmare fuel is the mathematical interpolation between different visual data. The algorithm is able to “fill in the blanks”, also known as “go to hell and back with unholy portals”, between various visual artifacts. Traversing latent space is an incredible thing, because it is here that many of the best AI artists find artifacts that blow your mind.
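For the curious, that “fill in the blanks” step can be sketched in a few lines of Python. This is only a toy illustration under my own assumptions: the latent vectors here are random lists of numbers, `LATENT_DIM` and the function names are made up for the example, and no actual image generator is attached. In a real GAN, each in-between vector would be fed to the trained generator to render one frame of the morph.

```python
import random

def lerp(z_start, z_end, t):
    """Linearly blend two latent vectors; t=0 gives z_start, t=1 gives z_end."""
    return [(1.0 - t) * a + t * b for a, b in zip(z_start, z_end)]

def latent_walk(z_start, z_end, steps):
    """Return `steps` evenly spaced latent vectors from z_start to z_end, inclusive."""
    if steps < 2:
        raise ValueError("need at least two steps")
    return [lerp(z_start, z_end, i / (steps - 1)) for i in range(steps)]

# Hypothetical setup: two random points in an 8-dimensional latent space.
# Real models typically use far more dimensions.
LATENT_DIM = 8
rng = random.Random(0)
z_a = [rng.gauss(0, 1) for _ in range(LATENT_DIM)]
z_b = [rng.gauss(0, 1) for _ in range(LATENT_DIM)]

# Five stops on the path from z_a to z_b; each would become one image.
frames = latent_walk(z_a, z_b, steps=5)
```

Artists often prefer curved paths (spherical interpolation, for instance) over a straight line, but the idea is the same: pick two points, walk between them, and look at what the model renders along the way.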

Of anyone in AI art, I have to shout out to Mario Klingemann, who single-handedly stood up the Tezos NFT ecosystem with his promotion of Hic et Nunc. Mario was massively early in AI art, and his works are eerie, moving, and profound.

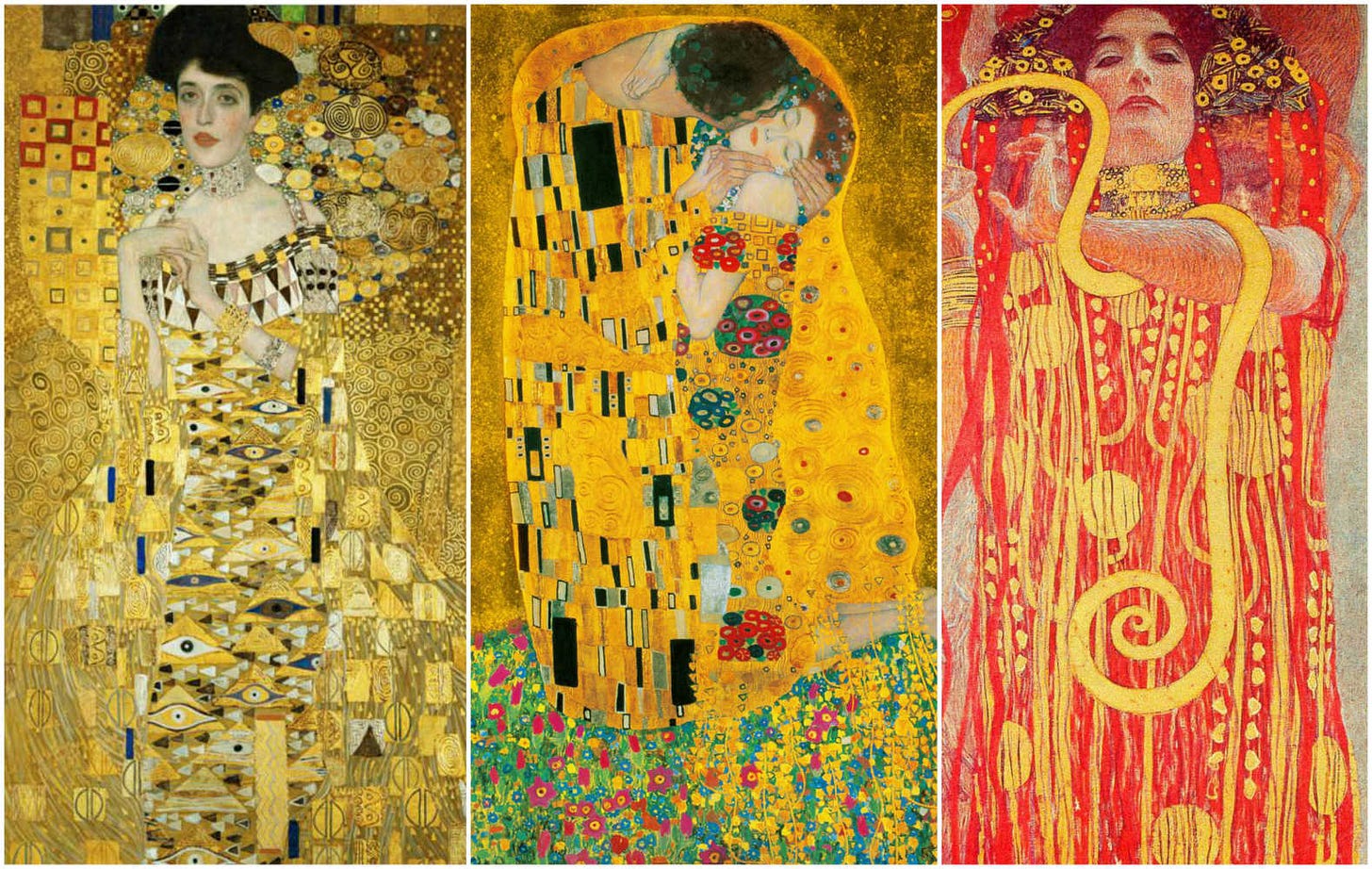

These latent space photographers, finding mathematical accidents resembling otherworldly renderings, have made amazing images. They remind me of Remedios Varo, or perhaps Gustav Klimt, combining wild incredible textures with glimpses of the human inside.

There are so many incredible artists in this space, and we can’t cover them all. I was blown away by Anna Ridler’s tulips, Helena Sarin’s work, Claire Silver, Gene Kogan, and pretty much anything that you can find on BrainDrops.

Some of my favorite pieces come from Ganbrood —

and from Ivona Tau, who really shows you what it is like to traverse a latent space.

or the wild portraits by Lemuet, channeling Dali in all his glory.

You must, MUST, see them in motion.

You can find an echo back to the 1500s and Giuseppe Arcimboldo in this work.

These fantastical discoveries are amazing. But they are only the entry point for what is to come next.

Internet-scale models

You are probably familiar at this stage with DALL-E and GPT-3 and Stable Diffusion. The short of it is that instead of working on a single large image data set, these models ingest a huge swath of the Internet’s content. And instead of requiring the magic of a gifted computer scientist to navigate the latent space, a GPT-3-style language model is attached as a navigator of the outputs.

So you can just type in what you want, and get results that match those concepts — something impossible in the prior versions.

Further, these models work sort of like sculptures. They start out with noise and then bring out progressively finer versions of images fitting the natural language description.
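To make the sculpture idea a bit more concrete, here is a toy refinement loop in Python. To be clear, this is not a real diffusion model and not how Stable Diffusion is implemented. It is a sketch under heavy assumptions: the “image” is just five brightness values, and the stand-in “denoiser” already knows the target, whereas a real model uses a trained neural network guided by your text prompt to guess what to remove at each step. All names are hypothetical.

```python
import random

def toy_refine(target, steps, seed=0):
    """Start from pure noise and nudge it toward `target` over `steps` rounds.

    Toy stand-in for a denoising loop: each round blends in more of the
    target and re-injects a little less random noise than the round before.
    """
    rng = random.Random(seed)
    x = [rng.gauss(0, 1) for _ in target]  # step 0: nothing but noise
    history = [x]
    for step in range(1, steps + 1):
        blend = step / steps        # grows from near 0 up to exactly 1
        noise_scale = 1.0 - blend   # shrinks to exactly 0 on the last step
        x = [
            (1.0 - blend) * xi + blend * ti + 0.1 * noise_scale * rng.gauss(0, 1)
            for xi, ti in zip(x, target)
        ]
        history.append(x)
    return history

def total_error(x, target):
    """Sum of absolute differences between the current state and the target."""
    return sum(abs(a - b) for a, b in zip(x, target))

# Hypothetical five-pixel "image": dark edges, bright center.
target = [0.0, 0.5, 1.0, 0.5, 0.0]
history = toy_refine(target, steps=10)
```

Looking at `history` from first to last entry shows the rough block of noise settling into the final shape, which is the part of the process the sculpture metaphor is pointing at.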

The results have been insane — anyone can generate a high fidelity rendering of pretty much any desired output. There’s almost no point showing artwork by any particular person, but here are some things I’ve bookmarked as being particularly clever in the Facebook groups where such art is shared.

To me, these last works are all amazing. They are illustration and, perhaps, cartooning or decoration at its finest.

I think we have yet to find a truly expressive voice for the current generation of AI art. The language is too concrete, too literal. It is in the breaking of its systems and the exploration of its frontiers that we will see novelty.

Or perhaps you know one that you can share? Leave your favorite in the comments and see you next time!

And don’t forget to share with others.